What is Explainable AI and Why It Matters in Enterprise Applications

Artificial Intelligence (AI) is transforming the modern enterprise, automating processes, uncovering insights, and supporting high-stakes decision-making across industries.

Yet as AI models grow more powerful, they also become more complex, often operating as opaque “black boxes.” When organizations cannot understand how or why an AI system produces a certain outcome, trust erodes, adoption slows, and compliance risks increase.

This challenge has given rise to Explainable AI (XAI), a discipline focused on making AI systems transparent, interpretable, and understandable to human users. As organizations rely more heavily on AI-driven operations, XAI becomes essential for responsible and effective enterprise adoption.

TL;DR

- Explainable AI (XAI) makes AI systems transparent, interpretable, and justifiable, helping humans understand how AI produces decisions and outputs. This enhances trust, accountability, and adoption in enterprise workflows.

- Enterprise benefits and use cases: XAI supports regulated industries such as healthcare, finance, and legal by helping teams meet compliance requirements, improve model performance, strengthen human–AI collaboration, and make informed decisions across operations.

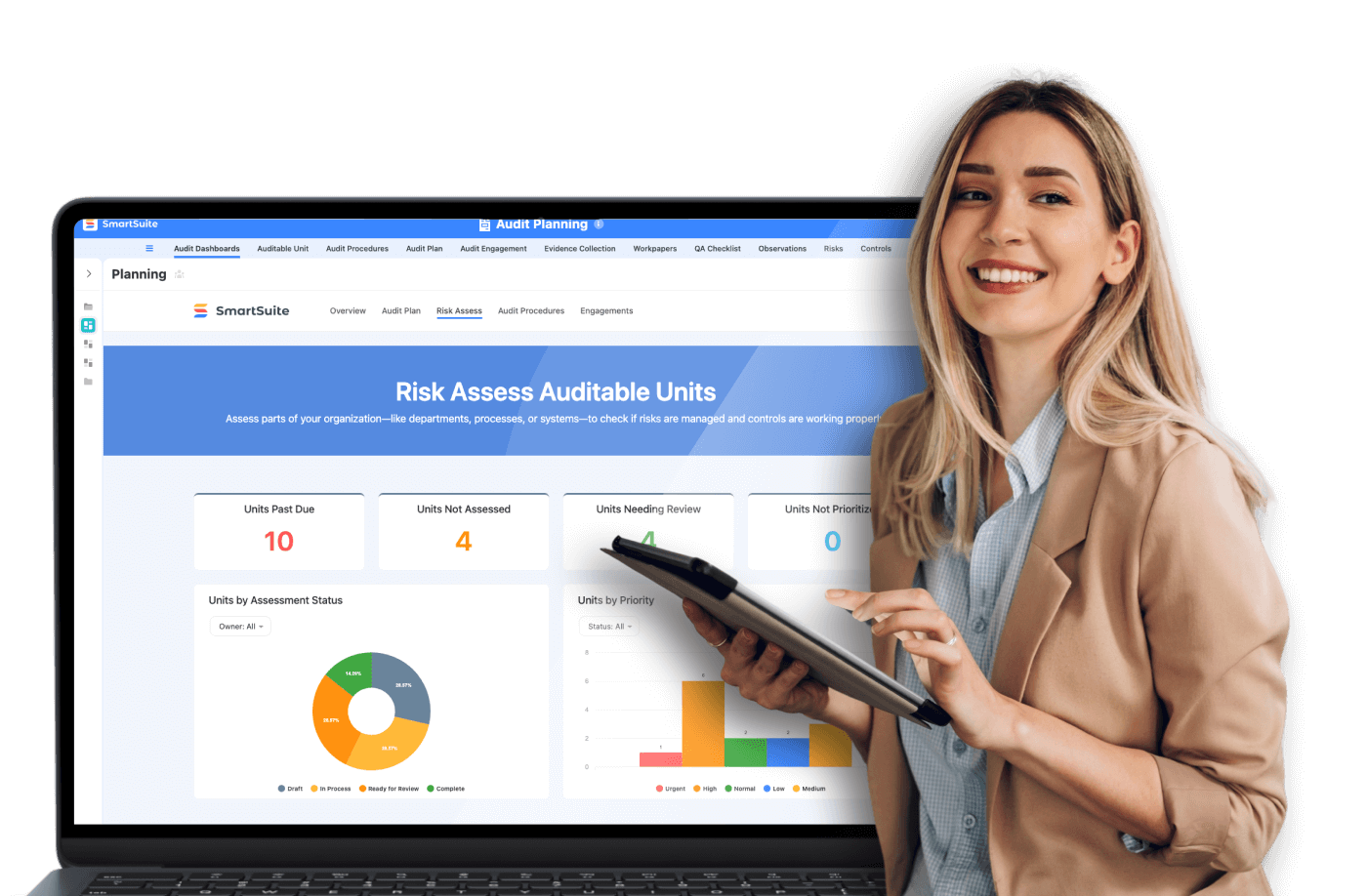

- SmartSuite provides built-in explainability, centralized AI governance, human-AI collaboration tools, model performance monitoring, and integration with external AI systems, operationalizing XAI to make AI insights actionable, auditable, and trustworthy.

What is Explainable AI (XAI)?

Explainable AI refers to the set of methods, systems, and practices that make AI-generated results understandable to humans. Rather than delivering opaque outcomes, XAI enables users to see the logic, rules, or patterns behind automated decisions.

Key Characteristics of Explainable AI

Transparency

Clear visibility into how AI models analyze data and arrive at conclusions.

Interpretability

The ability for end-users, technical or non-technical, to understand model behavior and identify the drivers behind specific outcomes.
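One way to make "drivers behind specific outcomes" concrete is to break a single prediction into per-feature contributions. The following is a minimal sketch, assuming a simple logistic scoring model. The feature names, weights, and baseline values are hypothetical and chosen only for illustration; real models usually need a dedicated attribution method.

```python
import math

# Hypothetical weights for an illustrative risk model (assumed, not from a real system).
WEIGHTS = {"debt_to_income": 2.0, "late_payments": 0.8, "years_employed": -0.3}
BIAS = -1.5
# Assumed baseline feature values, e.g. averages over a reference population.
BASELINE = {"debt_to_income": 0.3, "late_payments": 1.0, "years_employed": 5.0}


def predict(features):
    """Return the model's risk probability using the logistic function."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def explain(features):
    """Return each feature's contribution to the log-odds, relative to the
    baseline, sorted by absolute size with the largest driver first."""
    contributions = {
        name: WEIGHTS[name] * (features[name] - BASELINE[name]) for name in WEIGHTS
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"debt_to_income": 0.6, "late_payments": 3.0, "years_employed": 2.0}
drivers = explain(applicant)
for name, contribution in drivers:
    print(f"{name}: {contribution:+.2f} log-odds vs. baseline")
```

For a linear model like this one, the contributions add up exactly to the difference in log-odds between the applicant and the baseline, which is what makes the breakdown easy for a non-technical reviewer to check.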

Justifiability

Reasoned explanations for decisions to support ethical review, regulatory compliance, and business accountability.

Reliability

Monitoring and feedback loops that help organizations validate and improve the performance of AI systems over time.

Why Explainable AI Matters in Enterprises

Enhanced Trust and Adoption

For many leaders, AI adoption stalls because they cannot validate how systems make decisions. XAI lowers this barrier by giving stakeholders a way to inspect and verify automated insights, which matters most in regulated or high-impact environments such as healthcare, finance, or legal services.

Regulatory Compliance

Global regulations increasingly require organizations to explain and justify algorithmic decisions. XAI helps organizations:

- produce audit-ready explanations

- demonstrate fairness and accuracy

- address GDPR, CCPA, and emerging AI governance obligations

Improved Model Performance

Explainability exposes model weaknesses such as bias, drift, and incorrect assumptions, so data teams can refine, tune, and retrain models more effectively.
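Drift, one of the weaknesses mentioned above, can be quantified by comparing the distribution of a feature or score at training time with what the model sees in production. The sketch below uses the Population Stability Index (PSI) as one possible measure. The bin count, the floor for empty bins, and the sample values are all assumptions made for illustration.

```python
import math


def population_stability_index(expected, actual, bins=5):
    """Compare two samples of a numeric feature or model score.

    Bin edges are spread evenly over the range of the `expected`
    (training-time) sample; values outside that range fall into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp into the valid bin range
            counts[idx] += 1
        # A small floor keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative samples only: scores at training time vs. scores seen later.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
live_scores = [0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 1.0, 1.0, 1.0, 1.0]

psi = population_stability_index(training_scores, live_scores)
print(f"PSI = {psi:.2f}")
```

A PSI of zero means the two samples fall into the bins in identical proportions. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, though the right threshold depends on the model and the cost of a false alarm.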

Strengthened Human–AI Collaboration

Enterprise workflows increasingly blend human judgment with AI recommendations. When employees understand how AI arrives at its guidance, they can confidently validate, augment, or override results, improving outcomes and organizational alignment.

Enterprise Use Cases for Explainable AI

Healthcare

Clinical risk scoring, diagnostics support, and patient outcome prediction benefit greatly from transparent AI recommendations that physicians can interpret and trust.

Finance & Banking

Loan approval, fraud detection, risk analysis, and investment modeling require explainable decisions to ensure fairness and meet regulatory standards.

Manufacturing

Predictive maintenance and quality control models improve when engineers understand the factors that drive failure predictions or defect alerts.

Retail & Customer Experience

Recommendation engines and segmentation models become more powerful when marketing and operations teams understand the behavioral drivers behind consumer patterns.

Legal & Compliance

Explainability supports contract review, policy evaluation, and audit automation, helping teams validate results and maintain trust throughout regulatory scrutiny.

Key Challenges in Implementing Explainable AI

Balancing Complexity and Interpretability

Simpler models are easier to explain but may be less accurate; more complex models are often more accurate but harder to interpret. XAI seeks a workable balance between the two.

Protecting Proprietary Algorithms

Organizations must provide explainability without exposing sensitive intellectual property or competitive advantages.

Bias, Fairness, and Ethical Alignment

Explanations must not mask or perpetuate bias in the underlying model. Diverse training data, ethics reviews, and alignment checks are crucial.
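A basic fairness check can be automated alongside these reviews. The sketch below computes approval rates per group and the gap between the highest and lowest rate, a measure often called the demographic parity difference. The group labels and decisions are made-up sample data, and a single metric like this is a starting point for review rather than proof of fairness.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Return the largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions).values()
    return max(rates) - min(rates)


# Hypothetical model decisions for two groups, for illustration only.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
gap = demographic_parity_gap(sample)
print(rates, gap)
```

A large gap does not by itself show that a model is unfair, but it flags where an ethics review should look first.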

Infrastructure and Integration

XAI often requires new tools, governance processes, and integrated workflows, demanding modernized architecture and scalable data strategies.

Actionable Insights for Enterprises

- Invest in Explainable AI tooling to improve transparency and reduce risk

- Promote organization-wide data literacy to help teams understand AI outputs

- Implement rigorous model validation to ensure fairness, accuracy, and reliability

- Engage ethics experts to keep AI decisions aligned with organizational values

How SmartSuite Supports Explainable AI in the Enterprise

SmartSuite provides a structured, transparent foundation for operationalizing Explainable AI across enterprise workflows. By integrating governance, visibility, and human–AI collaboration into everyday processes, SmartSuite helps organizations make AI both powerful and accountable.

Built-In Explainability for AI-Assisted Workflows

SmartSuite enables teams to access:

- human-readable explanations for AI recommendations

- rationales behind automated scoring, prioritization, or routing

- decision logs showing how rules, data, and models drive outcomes

This equips teams to trust, review, and validate AI-generated insights.
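To illustrate what a decision log entry might contain, here is a minimal sketch of a record that pairs an automated decision with its inputs and rationale. The class and field names are hypothetical and do not represent SmartSuite's actual data model or API.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionLogEntry:
    """Hypothetical audit record for one AI-assisted decision."""

    record_id: str
    decision: str
    model_version: str
    inputs: dict
    top_drivers: list
    explanation: str
    # Timestamp is filled in automatically, in UTC, when the entry is created.
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self):
        """Serialize the entry so it can be stored or exported for audit."""
        return json.dumps(asdict(self), sort_keys=True)


entry = DecisionLogEntry(
    record_id="ticket-001",
    decision="route_to_senior_reviewer",
    model_version="priority-model-v3",
    inputs={"contract_value": 250000, "jurisdictions": 3},
    top_drivers=["contract_value", "jurisdictions"],
    explanation="High contract value across multiple jurisdictions.",
)
print(entry.to_json())
```

Storing the model version and the inputs next to the explanation lets a reviewer reconstruct later why a particular outcome was produced.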

Centralized AI Governance & Compliance

SmartSuite offers a governance framework that includes:

- documentation for AI assumptions and criteria

- audit-ready reporting for AI fairness and accuracy

- data lineage visibility to trace how information influences outcomes

This helps organizations align with regulatory and ethical requirements.

Human–AI Collaboration Tools

SmartSuite keeps humans in the loop with:

- inline feedback on AI-generated insights

- approval steps for sensitive decisions

- collaborative discussion threads

AI decisions become part of a transparent, multi-team review process.
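The approval-step idea can be sketched as a simple gate that decides whether an AI recommendation is applied automatically or sent to a person. This is an illustrative sketch only: the function name, the return labels, and the 0.9 confidence threshold are assumptions, not part of any SmartSuite feature.

```python
def route_decision(confidence, sensitive, threshold=0.9):
    """Return 'auto_apply' or 'human_review' for an AI recommendation.

    Sensitive decisions always go to a human. Other decisions go to a human
    when the model's confidence is below the (assumed) threshold.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if sensitive or confidence < threshold:
        return "human_review"
    return "auto_apply"


print(route_decision(confidence=0.97, sensitive=False))  # routine and confident
print(route_decision(confidence=0.97, sensitive=True))   # sensitive, so reviewed
print(route_decision(confidence=0.60, sensitive=False))  # low confidence, so reviewed
```

Making the sensitivity check independent of confidence means a highly confident model cannot bypass review on decisions the organization has marked as high stakes.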

AI Model Performance Monitoring

SmartSuite supports responsible AI operations with:

- dashboards tracking accuracy, drift, and anomalies

- automated alerts when behavior deviates from expectations

- version controls for rules, workflows, and model configurations

Seamless Integration With XAI Systems

SmartSuite connects with external AI platforms to:

- import explanations from third-party models

- orchestrate workflows triggered by AI insights

- consolidate model outputs, business rules, and human commentary

This makes SmartSuite the operational hub that unites data, AI, and explainability.

Conclusion

Explainable AI is essential for building trust, ensuring accountability, and enabling ethical AI adoption across modern enterprises. As organizations increasingly rely on AI for mission-critical decisions, explainability offers clarity, fairness, and strategic control.

By combining human understanding with AI intelligence, enterprises unlock greater innovation while reinforcing responsible governance. Platforms like SmartSuite help operationalize this vision, connecting explainability to real workflows, real decisions, and real business outcomes.
